Most marketers agree that a test-and-learn approach is an essential part of the job, but in reality testing is often limited by time and resources. As teams become busier, testing slips down the priority list.

Don't miss out on maximising your return on effort

To illustrate this, here's a real test that was run recently. Can you guess the winner?

Test hypothesis: An animated image will increase reader engagement, measured by clicks.

Version A: Static image

Version B: Animated image

And the winner is... version B.

It increased clicks for this content block by over 27%.

Clicks on the image jumped from 25% of all clicks in the static version to 36% in the animated version.

So given the immediate impact that testing can have on results, why is it rarely at the top of the marketing agenda?

Testing maximises the return on your resources

Testing ensures that the effort of creating and sending emails delivers ever-improving results. A structured and intelligent approach to testing focuses that effort on the areas that will make the most difference, fastest.

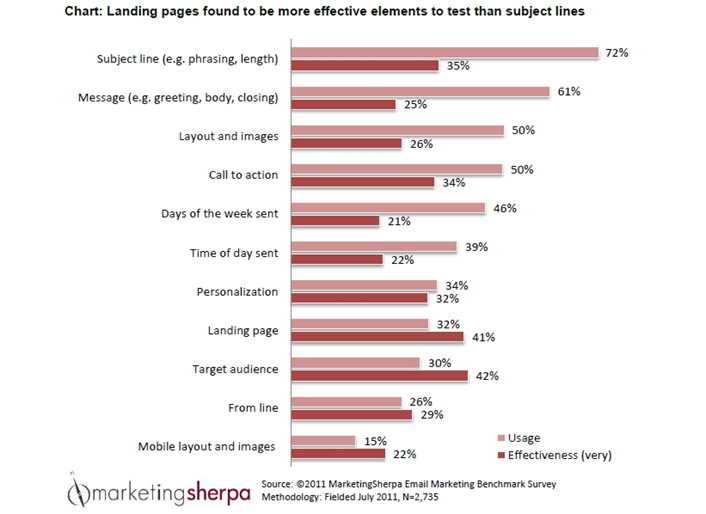

Interestingly, according to Marketing Sherpa, the subject line was the most common test that email marketers carried out, but it was in fact the least impactful. 72% of respondents consistently tested subject lines, but reported that this test created a strong improvement only 35% of the time.

In contrast, audience segmentation and landing pages were tested only around 30% of the time but created a strong improvement in over 40% of cases. Take a look at the graph to see the full results.

A 5-step approach to testing

Here at Jarrang we're passionate about testing. It ensures that we are constantly thinking about how we can help clients improve their return on marketing investment.

To ensure that the effort of testing delivers results, we follow this simple 5-step approach.

1. Form a hypothesis

What are you testing? What change are you looking for? How specifically will you measure this?

Being really clear about the reason for the test stops you a) testing for the sake of testing and b) testing things just to see if they make a difference and, as a result, achieving nothing.

The more specific your hypothesis, the clearer the results will be to analyse. For example: "A darker CTA button will create greater visual impact and therefore more engagement. This will result in more clicks than the current standard pale CTA button."

2. Confirm your KPIs

These are the metrics used to monitor the test and judge whether it has been successful:

- Opens

- Clicks

- Click-to-open

- Conversion rate

- Average order value

- Total revenue

- Return on investment

Again, be really clear about what you are measuring and why, based on what success should look like for this send. Is it clicks or conversions? Total clicks, or unique clicks for a specific content area?

This is really important in driving the business benefits you want to achieve. A higher click rate may not lead to higher conversion (revenue). So if revenue is the end goal, revenue should be the measure by which the test is judged.
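As a rough illustration of how these KPIs relate to one another, here is a minimal Python sketch. The `SendResult` fields, the `kpis` helper and all of the numbers are made up for this example, and definitions vary between platforms (conversion rate here is assumed to be orders divided by unique clicks).

```python
from dataclasses import dataclass


@dataclass
class SendResult:
    """Raw counts for one version of a send (field names are illustrative)."""
    delivered: int
    unique_opens: int
    unique_clicks: int
    orders: int
    revenue: float
    cost: float


def kpis(r: SendResult) -> dict[str, float]:
    """Derive the KPIs listed above from raw counts (assumes no zero counts)."""
    return {
        "open_rate": r.unique_opens / r.delivered,
        "click_rate": r.unique_clicks / r.delivered,
        "click_to_open": r.unique_clicks / r.unique_opens,
        "conversion_rate": r.orders / r.unique_clicks,  # assumed definition
        "average_order_value": r.revenue / r.orders,
        "total_revenue": r.revenue,
        "return_on_investment": (r.revenue - r.cost) / r.cost,
    }


# Made-up numbers: version B wins on clicks, version A wins on revenue.
version_a = SendResult(delivered=10_000, unique_opens=2_500, unique_clicks=400,
                       orders=40, revenue=2_400.0, cost=300.0)
version_b = SendResult(delivered=10_000, unique_opens=2_500, unique_clicks=500,
                       orders=35, revenue=2_100.0, cost=300.0)

for name, result in (("A", version_a), ("B", version_b)):
    k = kpis(result)
    print(f"{name}: click rate {k['click_rate']:.1%}, "
          f"conversion {k['conversion_rate']:.1%}, "
          f"revenue {k['total_revenue']:.2f}")
```

In this invented example, judging on click rate would pick version B, while judging on revenue would pick version A, which is why the success measure needs to be agreed before the send.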

3. Confirm testing requirements and timings

This includes the date of the test send as well as any follow-up sends to prove or disprove the result. You might need to allow some additional time if your test involves extra design work or creative development.

Depending on the type of test and hypothesis, you may need to consider running the test over multiple campaigns or weeks to ensure the results are valid (see below for more on this).

4. Perform the test

5. Record results and analyse performance

Make sure that you spend time drawing out the insights from the test. What have you learnt? Can you now adopt this learning as your new standard approach? Or do you need to test again to confirm how likely you are to see the same result? That likelihood is known as your statistical confidence.

Statistical robustness

The larger your sample size, the more sure you can be that the response truly reflects the behaviour of the whole audience. Based on the difference between the versions' results (or the difference you need to see) and the sample size, you can then estimate how confident you can be that the same result would occur each time.

This balance between sample size and the difference in results between versions will also help define which approach to A/B testing you should take.
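One common way to put a number on this is a two-proportion z-test. The sketch below is an illustration only, using the Python standard library and invented click counts. It is not necessarily the calculation any particular email platform performs, and `two_proportion_z_test` is a name chosen for this example.

```python
from math import erf, sqrt


def two_proportion_z_test(clicks_a: int, sent_a: int,
                          clicks_b: int, sent_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two click rates."""
    rate_a = clicks_a / sent_a
    rate_b = clicks_b / sent_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (clicks_a + clicks_b) / (sent_a + sent_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF via the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value


# Made-up counts: the same 4.0% vs 5.0% click rates at two sample sizes.
small = two_proportion_z_test(40, 1_000, 50, 1_000)
large = two_proportion_z_test(400, 10_000, 500, 10_000)
print(f"1,000 per version:  z = {small[0]:.2f}, p = {small[1]:.3f}")
print(f"10,000 per version: z = {large[0]:.2f}, p = {large[1]:.4f}")
```

With these invented figures, the same one-point difference in click rate is not significant at the conventional 5% level with 1,000 recipients per version, but is with 10,000 per version.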

10% version A : 10% version B THEN 80% shown the winner.

This approach means that the majority of your audience will see the stronger performing version - so maximising the results of that email.

Some email platforms will automate this process based on the agreed success measure, often open or click rate. You can set up the test versions and the criteria, then let the machine do the rest of the work.
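For teams without that automation, the 10/10/80 split can be sketched in a few lines of Python. This is a simplified illustration: `split_for_test` and `pick_winner` are hypothetical helpers, the addresses and click counts are invented, and the winner is chosen on click rate alone with no significance check.

```python
import random
from typing import Optional


def split_for_test(recipients: list[str], test_fraction: float = 0.10,
                   seed: Optional[int] = None
                   ) -> tuple[list[str], list[str], list[str]]:
    """Randomly split a list into test group A, test group B and the remainder."""
    shuffled = recipients[:]
    random.Random(seed).shuffle(shuffled)
    size = int(len(shuffled) * test_fraction)
    return shuffled[:size], shuffled[size:2 * size], shuffled[2 * size:]


def pick_winner(clicks_a: int, sent_a: int, clicks_b: int, sent_b: int) -> str:
    """Pick the version with the higher click rate (ties go to A, the control)."""
    return "B" if clicks_b / sent_b > clicks_a / sent_a else "A"


audience = [f"user{i}@example.com" for i in range(1_000)]
group_a, group_b, remainder = split_for_test(audience, seed=42)
print(len(group_a), len(group_b), len(remainder))  # 100 100 800

# Made-up click counts collected after the test window closes.
winner = pick_winner(clicks_a=4, sent_a=len(group_a),
                     clicks_b=7, sent_b=len(group_b))
print(f"Send version {winner} to the remaining {len(remainder)} recipients")
```

Shuffling before splitting matters: taking the first 10% of a list sorted by sign-up date or surname could bias the test groups.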

50% : 50% send to all

Here you split your data, send each half a different version, and review the results once the send is complete, optimising for the next send.

Some reasons why this approach will be needed include:

- If you can't automate the 'winner stays on' process, a lack of resources may rule out the first approach.

- If you're not sure that the difference in results will be large, and so need volume to help reach statistical significance.

- If the final success measure is not based on opens or clicks. For example, revenue may be the final objective, but that data sits outside the marketing platform and cannot be analysed before the final send deadline.
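To get a feel for how much volume a small difference needs, the sketch below uses a standard approximation for the sample size required to compare two proportions. The 5% significance level, 80% power and the click rates are assumptions picked for illustration, and `sample_size_per_version` is a hypothetical helper.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_version(base_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate recipients needed per version to detect the given difference."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)
    mean_rate = (base_rate + expected_rate) / 2
    numerator = (z_alpha * sqrt(2 * mean_rate * (1 - mean_rate))
                 + z_beta * sqrt(base_rate * (1 - base_rate)
                                 + expected_rate * (1 - expected_rate))) ** 2
    return ceil(numerator / (expected_rate - base_rate) ** 2)


# Made-up click rates: a small expected difference needs far more volume.
print(sample_size_per_version(0.040, 0.045))  # 4.0% vs 4.5%
print(sample_size_per_version(0.040, 0.060))  # 4.0% vs 6.0%
```

If the required number per version is larger than 10% of your list, a 50:50 split is likely the more realistic option.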

Record your results and share with the wider marketing team

Being disciplined in recording your results ensures that over time you can track what has been tested and why your current approach exists. In a year's time will the same team be in place? A clear record of past tests will really help your future team.

And share the results across your business. One of the real benefits of testing with email is the speed and clarity of response. What you learn about your readers' preferences in email may well benefit your other marketing channels and sales teams as well.
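A test log does not need special tooling. As one possible shape, the sketch below writes each test as a row of CSV that can be opened in any spreadsheet. The `EmailTestRecord` fields are suggestions only, and the date and rates in the example row are placeholders rather than real results.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class EmailTestRecord:
    """One row in a shared test log (field names are illustrative)."""
    send_date: str
    hypothesis: str
    kpi: str
    version_a: str
    version_b: str
    result_a: float
    result_b: float
    winner: str
    next_step: str


def write_log(records: list[EmailTestRecord], out) -> None:
    """Write records as CSV to any file-like object."""
    names = [f.name for f in fields(EmailTestRecord)]
    writer = csv.DictWriter(out, fieldnames=names)
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


# Placeholder values throughout; the date and rates are invented.
log = [EmailTestRecord(
    send_date="2024-01-15",
    hypothesis="An animated image will increase reader engagement",
    kpi="click rate",
    version_a="Static image",
    version_b="Animated image",
    result_a=0.040,
    result_b=0.051,
    winner="B",
    next_step="Repeat on the next send to confirm",
)]

# Swap the buffer for open("test_log.csv", "w", newline="") to save a file.
buffer = io.StringIO()
write_log(log, buffer)
print(buffer.getvalue())
```

Recording the hypothesis and the next step alongside the numbers is what lets a future team see why the current approach exists.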

Test what will make a difference

It's important to run tests that will genuinely show you how your audience reacts to your emails. Here are some examples of interesting tests to carry out:

- Use of animation

- Inclusion and position of social icons and social share

- Friendly from / pre-header

- Customer segments

- Dynamic content

- Geo specific content

- Short-form v long-form content

- No hero image / big hero image

- Video content

- CTA buttons - look, position, quantity

Maximise the return on effort through testing

By testing across the year, a well-known budget hotel chain was able to optimise its Black Friday send compared with the year before. Through a series of tests including use of:

- Countdown timers

- Discount levels

- Recommendation options

the marketing team learnt what to keep and what to change across a number of sections of the email. This optimisation across the year enabled them to create a Black Friday send that, compared to the previous year, delivered improved performance, including +290% clicks and +79% booking conversion.

*****

If you really want to drill down into the behaviour of your customers/recipients you need to plan out some ideas for testing. Think about the objective of the campaign - what is it that you want the person to do when they open your email? This will inform the basis of your testing. Want more clicks? Analyse your CTA placement. Want more opens? Trial personalisation in the subject line.

Whichever test you decide to run, follow the five steps listed above to ensure you get worthwhile results that will drive your overall email marketing strategy. Or talk to us: we'd love to help you begin the testing journey to ever-improving results.
